In the last 6 years we’ve gone from ‘Software is eating the world’ to ‘AI is eating software’. Yes, tremendous advances in machine learning have ushered in the Second Wave of AI, and increasingly CEOs are advised that every company needs to become an AI company.

But what is AI? For now, it is many disparate things: Speech and image recognition, autonomous vehicles, sentiment analysis, process automation, chatbots, and many other (somewhat) smart applications. Realistically though, there isn’t all that much intelligence in ‘artificial intelligence’ yet. At this time AI is typically quite narrow and rigid.

Ultimately, we expect intelligent entities to understand us, and the world. One key aspect of ‘real AI’ is good natural language competency. But natural language is hard. Not for us, but for an AI.

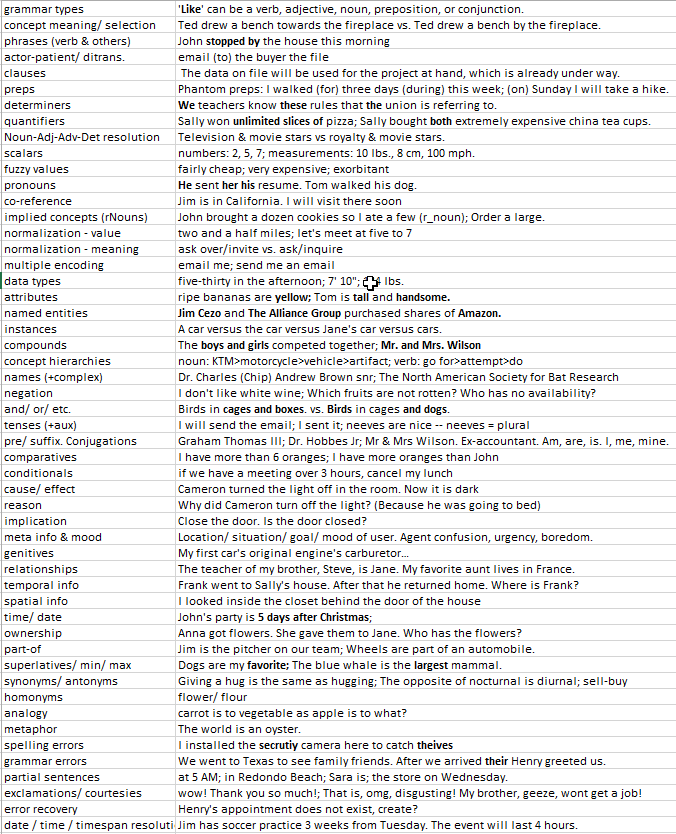

Below is a short list of common aspects of language that I quickly compiled from memory in about half an hour. Any AI wanting to understand language, or to converse effectively, needs to be able to master these, and a lot more.

Or consider the language ability of a 6-year-old child. If I spoke just these 6 words: “My sister’s cat Spock is pregnant”, she would understand, and immediately learn at least four facts (Peter has a sister; the sister has a cat; the cat is named Spock; the cat is pregnant), and would probably be surprised that ‘Spock’ turned out to be female. She would be able to use that new knowledge in conversations that follow, and a week later might ask if the kittens have arrived.

Contrast this with state-of-the-art Alexa or Siri: They won’t understand anything, and will remember nothing — in fact you’ll be lucky to get a Star Trek episode as a response…

Limitations of Current AI

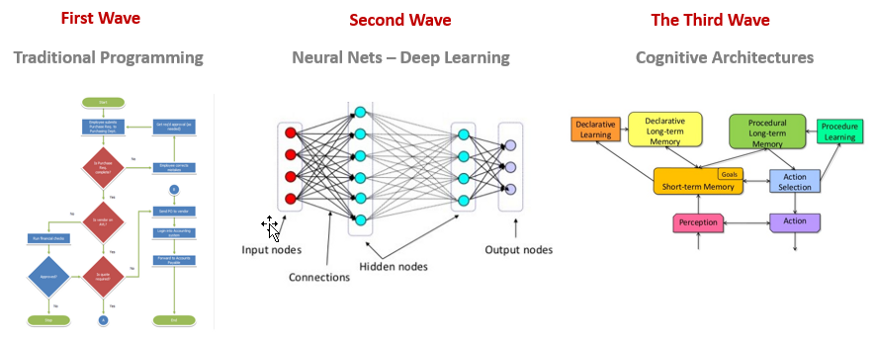

The vast majority of AI applications these days are based on a combination of traditional programming (flowchart-like logic and/or rules) and machine learning (ML), i.e. deep neural networks and other statistical methods.

Take for example a chatbot or ‘personal assistant’ app. Typically, you’ll have an ML categorizer to establish the ‘intent’ of an utterance. This is essentially something that will force your input (stimulus) into one of maybe a hundred or a thousand (response) ‘buckets’. If what you say includes something like ‘weather’ or ‘need umbrella’, it will probably go into the ‘weather forecast’ bucket.

Given enough training data and a good implementation, this utterance categorization can work surprisingly well — especially if the user has been ‘trained’ to use the right magic keywords.
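To make the ‘bucket’ idea concrete, here is a minimal sketch in Python. It is not how any particular assistant is built: a real system would use a trained ML classifier, and I am standing in for it with simple keyword overlap. The bucket names, the keyword lists, and the `categorize` function are all made up for illustration.

```python
import re

# Hypothetical intent buckets and trigger keywords (illustrative only;
# a real categorizer would be a trained statistical model).
INTENT_KEYWORDS = {
    "weather_forecast": {"weather", "umbrella", "rain", "forecast"},
    "book_flight": {"flight", "fly", "ticket"},
    "play_music": {"play", "song", "music"},
}


def categorize(utterance: str) -> str:
    """Force an utterance into the bucket whose keywords it matches most."""
    words = set(re.findall(r"[a-z']+", utterance.lower()))
    scores = {
        intent: len(words & keywords)
        for intent, keywords in INTENT_KEYWORDS.items()
    }
    best = max(scores, key=scores.get)
    # If nothing matched at all, fall back instead of guessing a bucket.
    return best if scores[best] > 0 else "fallback"


print(categorize("Do I need an umbrella today?"))
print(categorize("Tell me a joke"))
```

Note that the function always returns exactly one bucket name. Whatever you actually meant, the input ends up in one of the predefined buckets or in the fallback.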

The second part of the interaction, the response part, is usually custom-programmed either directly in code, or indirectly via some higher-level development toolkit. Here the trick is to extract relevant parameters (keywords and phrases) to be able to complete the task at hand (e.g. the city and date of your weather request), or to prompt for missing information.

This is where things get trickier: the program has to blindly pick keywords with little or no memory of what has transpired, and without personal history, common sense, or conceptual understanding. So you can get conversations like this:

Customer: “My flight to Dallas in March on United was a disaster.”

Bot: “Ok, I’ll book a flight to Dallas on United. When in March would you like to travel?”
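The exchange above is easy to reproduce with a toy keyword extractor. The sketch below assumes the utterance has already been put in a ‘book flight’ bucket. The vocabularies, the slot names, and both functions are hypothetical, chosen only to show the mechanism.

```python
import re

# Hypothetical vocabularies; a real system would use far larger lists.
CITIES = {"dallas", "boston", "denver"}
AIRLINES = {"united", "delta"}
MONTHS = {
    "january", "february", "march", "april", "may", "june", "july",
    "august", "september", "october", "november", "december",
}


def extract_slots(utterance: str) -> dict:
    """Blindly pick known keywords out of the utterance."""
    slots = {"city": None, "airline": None, "month": None}
    for word in re.findall(r"[a-z]+", utterance.lower()):
        if word in CITIES:
            slots["city"] = word.title()
        elif word in AIRLINES:
            slots["airline"] = word.title()
        elif word in MONTHS:
            slots["month"] = word.title()
    return slots


def book_flight_response(utterance: str) -> str:
    """Fill the booking template, or prompt for the first missing slot."""
    slots = extract_slots(utterance)
    if slots["city"] is None:
        return "Where would you like to fly to?"
    if slots["airline"] is None:
        return f"Which airline would you like to fly to {slots['city']}?"
    if slots["month"] is None:
        return (f"Ok, a flight to {slots['city']} on {slots['airline']}. "
                "When would you like to travel?")
    return (f"Ok, I'll book a flight to {slots['city']} on {slots['airline']}. "
            f"When in {slots['month']} would you like to travel?")


print(book_flight_response(
    "My flight to Dallas in March on United was a disaster."))
```

Nothing in this code looks at ‘was a disaster’. The extractor finds a city, an airline, and a month, so the template is filled and the complaint is treated as a booking request.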

It gets worse.

While statistical ‘intent’ categorization plus programmatic keyword extraction may have an overall accuracy of 80 to 90%, once you try stringing them together for any meaningful ongoing conversation, the success rate plummets: after just 4 interactions you may find yourself below a 50% chance of success.
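The arithmetic behind this is simple compounding. As a rough sketch, assume every interaction succeeds independently with the same probability, which is a simplification, since real conversational errors are not independent. The chance that a whole conversation goes right is then the per-turn accuracy raised to the number of turns. The function name is my own.

```python
def conversation_success(per_turn_accuracy: float, turns: int) -> float:
    """Chance that every turn succeeds, assuming independent turns."""
    return per_turn_accuracy ** turns


for accuracy in (0.80, 0.85, 0.90):
    chance = conversation_success(accuracy, 4)
    print(f"{accuracy:.0%} per turn -> {chance:.1%} after 4 turns")
```

Under that assumption, 80% per-turn accuracy leaves you at about 41% after 4 turns, 85% at about 52%, and 90% at about 66%. So the drop below 50% applies to the lower end of the 80 to 90% range, and a few more turns push the upper end below 50% as well.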

A Better Approach

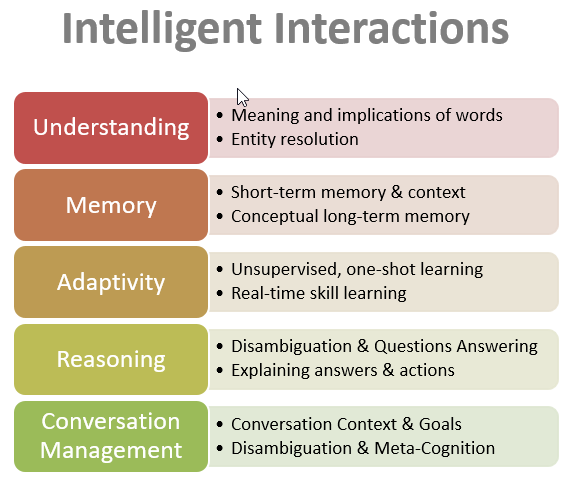

Fortunately, we are starting to move past these limitations: a Third Wave of AI is beginning to address this lack of intelligence and functionality. The key difference is to base language (and other AI) applications on a comprehensive cognitive architecture, or intelligence engine. Using this approach we’ve already demonstrated the ability to provide all the key functionality that long, ongoing, intelligent conversations require (see summary below).

Cognitive architectures not only enable intelligent interactions, but are also needed to make sense of articles, reports, and other text like contracts and research papers. Statistical methods like deep learning and word vectors can provide passable translation and sentiment analysis, but cannot comprehend specific text details. For one, they cannot learn and reason about dynamic entities and relationships.

In order to have a deep understanding of a given text, the system needs to take into account every single word, and its meaning in the context not only of the current sentence, but also of the overall topic and what has come before. Furthermore, it needs to be able to reason about different interpretations in order to select the correct one. In practice, the AI must also be able to ask for clarification, and to look up relevant background material autonomously. It needs to build an internal semantic model of the material.
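One very simple way to picture an ‘internal semantic model’ is a store of subject, relation, object facts. The sketch below is only an illustration of that idea, not the cognitive architecture discussed here. The class, the relation names, and the identifiers `sister_1` and `cat_1` are invented, and the facts from the earlier ‘Spock’ sentence are entered by hand, whereas the hard part is deriving them from language.

```python
from collections import defaultdict


class SemanticModel:
    """Toy store of (subject, relation, object) facts. Illustrative only."""

    def __init__(self):
        self.facts = defaultdict(set)

    def learn(self, subject: str, relation: str, obj: str) -> None:
        """Record one fact about a subject."""
        self.facts[(subject, relation)].add(obj)

    def ask(self, subject: str, relation: str) -> list:
        """Return everything known for this subject and relation."""
        return sorted(self.facts.get((subject, relation), set()))


model = SemanticModel()
# The four facts from "My sister's cat Spock is pregnant", entered by hand.
model.learn("Peter", "has_sister", "sister_1")
model.learn("sister_1", "has_cat", "cat_1")
model.learn("cat_1", "name", "Spock")
model.learn("cat_1", "state", "pregnant")

# Follow a chain of relations: Peter -> his sister -> her cat -> its state.
sister = model.ask("Peter", "has_sister")[0]
cat = model.ask(sister, "has_cat")[0]
print(model.ask(cat, "name"), model.ask(cat, "state"))
```

Even this toy shows why remembered, linked facts matter: a later question such as ‘have the kittens arrived?’ only makes sense if the pregnancy was stored and tied to the right entity.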

Finally, the abilities to answer questions about its knowledge, to discover contradictions in text, and to produce summaries all rely on the core cognitive abilities of an intelligence engine.

In summary, it has become clear that flowchart-like programming (the First Wave) cannot possibly deal with the complexities of human language. More recently we’ve learned that even massive data-driven machine learning approaches (the Second Wave) are limited to mediocre translation, ‘intent’ classification, sentiment analysis, and the like. These systems cannot understand text or have ongoing conversations. Cognitive architectures (the Third Wave), on the other hand, have already demonstrated their ability to handle natural language more comprehensively and effectively. As the Third Wave matures, we will be moving into a world where AI is not just ‘eating the world’, but truly enhancing our lives.