Let’s start at the beginning. Why do we even need this term?

In 1956, when the term ‘AI’ was coined, the ambition was to build machines that could learn and reason like humans: incrementally, conceptually, interactively, in real time. The idea was that having machines that could automate tasks and help solve difficult problems would surely be of great benefit to society.

However, over several decades of trying and failing (badly), the original vision was largely abandoned, and the field of ‘AI’ morphed into ‘Narrow AI’ — solving one particular problem at a time, be it chess, chatbots, speech recognition, or image generation.

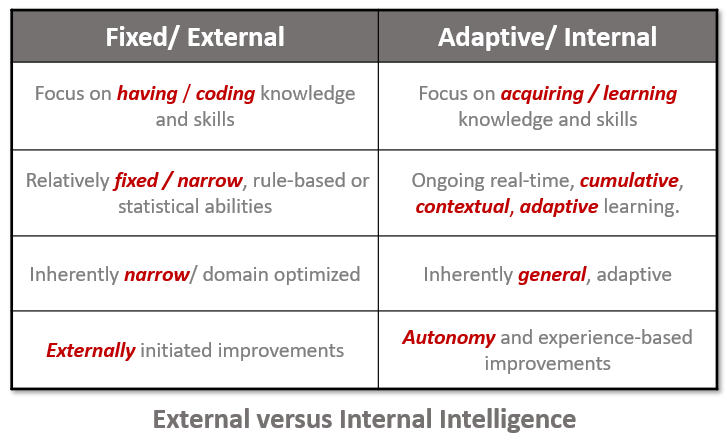

A subtle but crucial point, not widely appreciated, is that these selected problems are actually solved by the engineers and data scientists who figure out how to program a computer, or how to massage data and parameters, to achieve the desired result. It is external intelligence solving the problem, not the ‘AI’ program itself.

Relatedly, the focus shifted to systems having knowledge rather than the ability to acquire it. This led to software optimized for solving sets of fixed, predefined problems. Any change in requirements or environment outside the original scope requires the application of additional human intelligence.

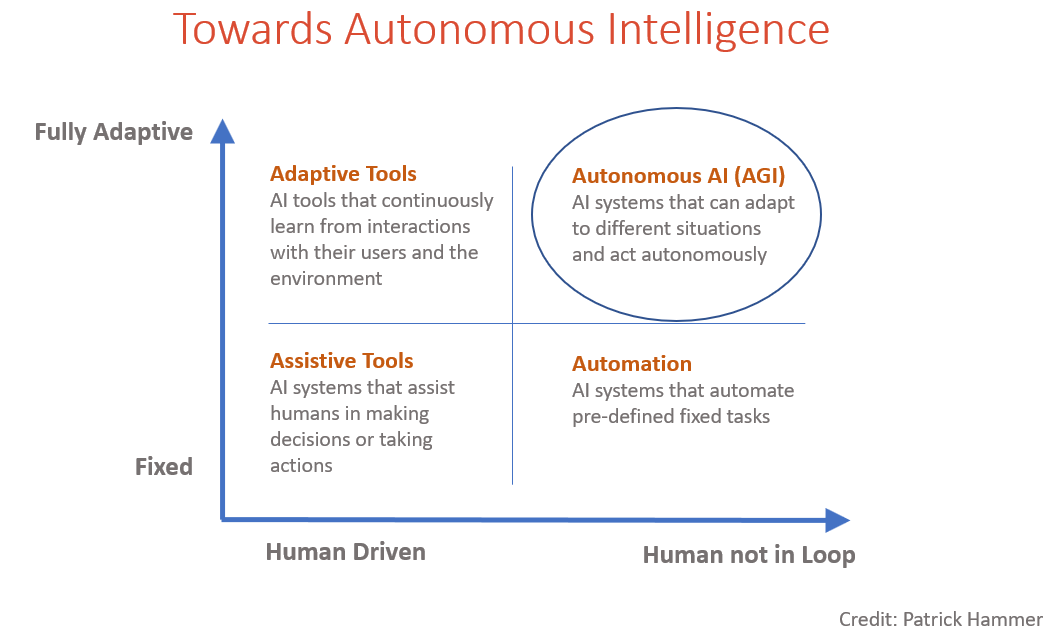

Another useful way to look at the goals and realities of AI is to compare systems by their degree of adaptiveness and autonomy. Current AI efforts, including Generative AI, reside almost exclusively in the bottom-left quadrant.

As a consequence, most computer scientists today only know AI from this dramatically watered-down perspective.

Around 2001, several of us felt that hardware, software, and cognitive theory had advanced sufficiently to rekindle the original vision. At that time we found about a dozen people who were actively doing research in this area and willing to contribute to a book (ref) to share ideas and approaches. In 2002, after some deliberation, three of us (Shane Legg, Ben Goertzel and myself) decided that ‘Artificial General Intelligence’, or AGI, best described our shared goal. We wanted to give our community a distinctive identity and to differentiate our work from mainstream AI, which was unlikely to lead to general autonomous intelligence. A simple description of AGI is:

A computer system that can learn incrementally, by itself, to reliably perform any cognitive task that a competent human can – including the discovery of new knowledge and solving novel problems. Crucially, it must be able to do this in the real world, meaning: in real time, with incomplete or contradictory data, and given limited time and resources.

As examples, it would be able to learn new professional and scientific domains, and keep them up to date, in real time through reading, coaching, practice, etc.; conceptualize novel insights; solve complex, goal-directed, multi-step problems; and apply existing knowledge and skills to new domains. Naturally, it would also have an excellent ability to learn to use a wide variety of tools. For more details and examples, see AGI Checklist.

Given the many technical advantages that computers have over humans, AGIs will generally exceed human cognitive ability. For one, we are not that good at thinking rationally; rationality is really an evolutionary afterthought.

On the other hand, an AGI would not necessarily have a high degree of sensory acuity or dexterity. I call this approach the ‘Helen Hawking theory of AGI’: Helen Keller and Stephen Hawking were highly intelligent in spite of their physical limitations. Note that an initially limited version of AGI would enable the rapid development of highly capable robots; it’s the ‘brain’ that’s all-important.

One unfortunate misconception about AGI is that it’ll be ‘god-like’, i.e., able to solve any and all problems. This is just flat-out wrong. Chaos theory and quantum uncertainty ensure that most real-world problems are ultimately intractable. As a simple example, we’ll never be able to perfectly predict the weather a week ahead.

The ‘G’ in AGI alludes to the ‘g’ used in psychometrics to denote ‘general human cognitive ability’: our ability to adaptively learn a very wide variety of tasks and solve a wide variety of problems. It does not refer to some magical, theoretical ability to solve any scientific, mathematical or logic problem whatsoever.

Another common misunderstanding is that AGI needs to be human-like beyond its desired cognitive abilities. No, it does not need to have all the quirks of human development and emotion. A good analogy is that we’ve had ‘flying machines’ for over 100 years, but are nowhere near reverse-engineering birds. With AGI we are interested in building ‘thinking machines’, not reverse-engineering human brains.

Abuse of the term

Over the years the term ‘AGI’ has gathered good momentum and is now widely used to refer to machines with human or super-human level capabilities (wiki). But therein lies the problem: it is now being used indiscriminately in marketing and fundraising for anything that remotely resembles ‘AGI’, for example, systems that match or exceed human abilities in only one or a few domains or benchmarks. Well, we’ve had calculators for hundreds of years!

A particularly egregious example of abuse is the CEO of a large LLM company saying that “We’ll have AGI soon… [but] It will change the world much less than we all think…” (ref). He’s clearly not talking about ‘Real AGI’.

Some people have suggested using ‘AGI’ for any work in the general area of autonomous learning, or for any ‘model-free’, adaptive, unsupervised or similar approach or methodology. I don’t think this is justified, as many clearly narrow AI projects use such methods. One can certainly assert that some approach or technology will likely help achieve AGI, but I think it is reasonable to judge projects by whether they are explicitly on a path (i.e., have a viable-seeming roadmap) to achieving the grand vision: a single system that can learn incrementally, reason abstractly, and act effectively over a wide range of domains, just like humans can.

Alternative definitions of ‘AGI’ and their merits

“Machines that can learn to do any job that humans currently do” — I think this fits quite well, except that it seems unnecessarily ambitious. Machines that can do most jobs, especially mentally challenging ones, would get us to our overall goal of having machines that can help us solve difficult problems like ageing, energy, and pollution, and help us think through political and moral issues, etc. Naturally, they would also help to build machines that will handle the remaining jobs we want to automate.

“Machines that pass the Turing Test” — The current Turing Test asks too much (the AI may have to dumb itself down to convince judges that it is human) and too little (limited conversation time). A much better test would be to see whether the AI can learn a broad range of new, complex, human-level cognitive skills via autonomous learning and coaching.

“Machines that are self-aware/ can learn autonomously/ do autonomous reduction/ etc.” — These definitions grossly underspecify AGI. One could build narrow systems that have these characteristics (and people probably already have), but that are nowhere near AGI (and may not be on the path at all).

“A machine with the ability to learn from its experience and to work with insufficient knowledge and resources.” — These are important requirements, but the definition does not specify the level of skill one expects. Again, systems already exist that have these qualities but are nowhere near AGI.

So how do we get to AGI?

The incredible value of ‘Real AGI’ is obvious to many people. Here’s a quote from the CEO of Google:

“AI is probably the most important thing humanity has ever worked on. I think of it as something more profound than electricity or fire.” (ref)

This potential to boost human flourishing raises some obvious questions:

“Why are so few people working directly on achieving AGI?”

“What is the most direct path to AGI?”

and, if it is possible now:

Please follow the links above for some answers, and join us to help make AGI happen.

Peter Voss — CEO and Chief Scientist, Aigo.ai