Microsoft is re-commissioning the Three Mile Island nuclear power plant in order to feed just one of its next-generation AI data centers [ref].

Anthropic’s CEO predicts that we’ll soon need models that cost $100 billion [ref].

Sam Altman is seeking $7 trillion to achieve AGI [ref].

Does this make sense?

Yes, LLMs will continue to get better, faster, and cheaper.

Yet they will not get us to AGI.

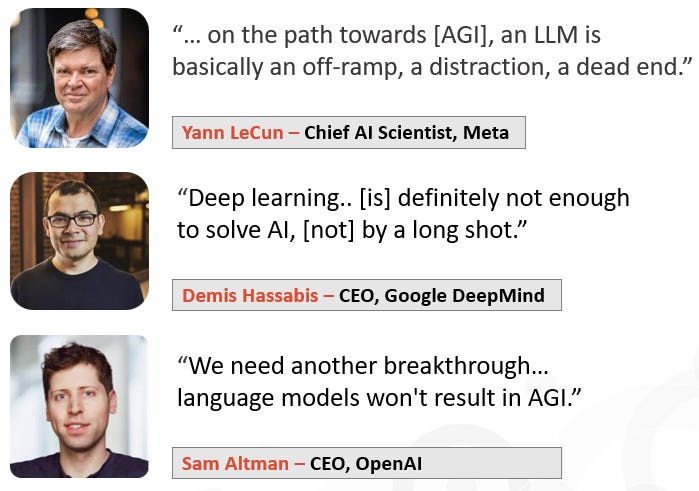

But don’t take my word for it:

Yann LeCun [ref], Demis Hassabis [ref], Sam Altman [ref].

And it’s not that LLMs ‘just need another breakthrough’ to get to AGI; their limitations run much deeper. Apart from the inherent problems of hallucination and a lack of robust reasoning, the more serious and fundamental problem is that they cannot learn (update their core model) incrementally in real time. This means that even a $100 billion model cannot adapt to new data: it has to be thrown away on a regular basis and a new model built from scratch. This limitation is dictated by the ‘Transformer’ technology that all LLMs are based on and that is core to their impressive capabilities.

GPT: Generative (makes up stuff). Pretrained (read-only). Transformer-based (requires bulk, back-propagation-style training).

Now consider that a child learns language and basic reasoning from at most a few million words, not tens or hundreds of trillions of words.

Also consider that our brains operate on about 20 watts — they don’t need nuclear-level power to ‘build their model’.

So from first principles we know that human-level intelligence is possible with orders of magnitude less power and data.
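To put a rough number on the data gap, here is a back-of-the-envelope sketch. The word counts are the ranges quoted above; the specific values (3 million for "a few million", 10 and 100 trillion for the LLM range) are assumptions picked for illustration, not measurements.

```python
import math

# Figures taken from the ranges quoted in the text.
# The exact values below are illustrative assumptions.
CHILD_WORDS = 3e6         # "a few million words" (assumed: 3 million)
LLM_WORDS_LOW = 10e12     # "tens of trillions" (assumed: 10 trillion)
LLM_WORDS_HIGH = 100e12   # "hundreds of trillions" (assumed: 100 trillion)


def orders_of_magnitude(larger: float, smaller: float) -> float:
    """Return log10 of the ratio between two positive quantities."""
    return math.log10(larger / smaller)


low = orders_of_magnitude(LLM_WORDS_LOW, CHILD_WORDS)
high = orders_of_magnitude(LLM_WORDS_HIGH, CHILD_WORDS)
print(f"Data gap: roughly {low:.1f} to {high:.1f} orders of magnitude")
```

Under these assumed values the gap comes out at about six to seven orders of magnitude. Changing the assumed child figure by a factor of a few shifts the result by less than one order.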

How can we achieve this with AI?

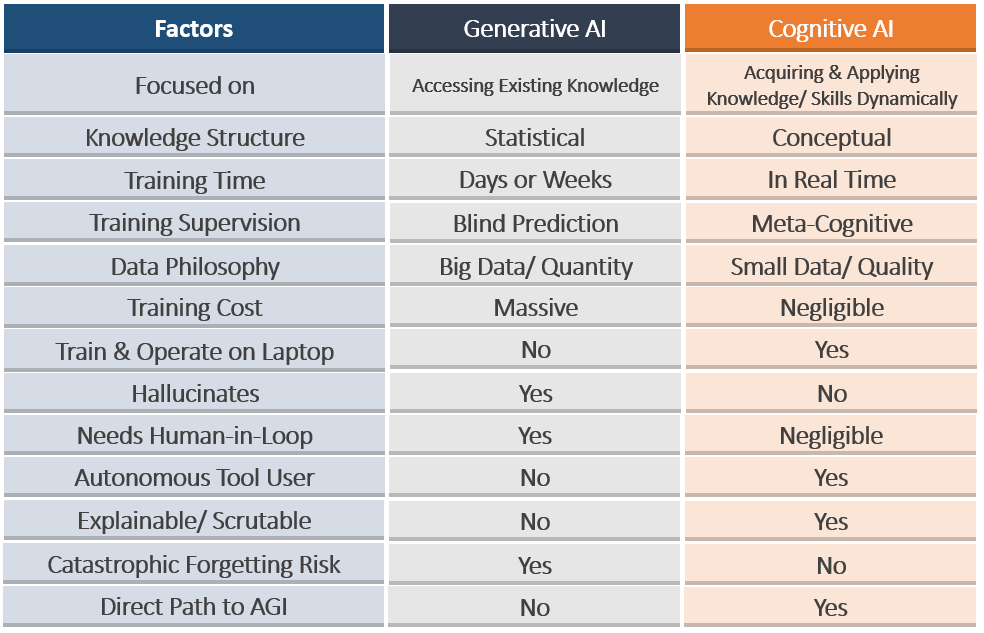

The key is starting with a clear understanding of what makes human intelligence so powerful. This is a Cognitive AI approach, as opposed to big-data Statistical AI.

Cognitive AI (also referred to by DARPA as “The Third Wave of AI”) starts with identifying the core requirements of human intelligence.

These include the ability to learn incrementally in real time, to learn conceptually, and to learn from very limited data. For example, a child can learn to recognize, say, a giraffe from a single picture; we don’t need hundreds of examples. We can also generalize from just a few examples and apply this knowledge in very diverse circumstances (this is called ‘transfer learning’). Importantly, we also monitor and control our thinking and reasoning via meta-cognition: we are able to think about our thinking.
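To make "incremental learning from very limited data" concrete, here is a toy sketch of a nearest-prototype learner. It is not INSA and not a cognitive architecture; it only illustrates the difference between folding in one new example at a time and rebuilding a model from scratch. The class name `PrototypeLearner`, the two-number feature vectors, and the labels are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class PrototypeLearner:
    """Toy incremental learner: one running-mean prototype per label."""
    prototypes: dict = field(default_factory=dict)  # label -> mean feature vector
    counts: dict = field(default_factory=dict)      # label -> examples seen so far

    def learn(self, features, label):
        """Fold a single example into the model; nothing is retrained."""
        if label not in self.prototypes:
            # First exposure: the example itself becomes the prototype.
            self.prototypes[label] = list(features)
            self.counts[label] = 1
            return
        self.counts[label] += 1
        n = self.counts[label]
        proto = self.prototypes[label]
        for i, x in enumerate(features):
            # Running-mean update: new_mean = old_mean + (x - old_mean) / n
            proto[i] += (x - proto[i]) / n

    def predict(self, features):
        """Return the label whose prototype is closest (squared Euclidean)."""
        def sq_dist(proto):
            return sum((a - b) ** 2 for a, b in zip(features, proto))
        return min(self.prototypes, key=lambda lbl: sq_dist(self.prototypes[lbl]))


# Hypothetical features: [relative neck length, relative height].
learner = PrototypeLearner()
learner.learn([0.2, 0.3], "horse")
learner.learn([0.3, 0.2], "horse")
learner.learn([0.9, 0.8], "giraffe")  # a single exposure adds a new concept
print(learner.predict([0.85, 0.9]))   # prints "giraffe"
```

Each call to `learn` touches only the prototype for one label, so adding the giraffe leaves what was learned about horses intact. Real systems need far richer representations than a mean vector; the point here is only the update pattern.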

INSA (Integrated Neuro-Symbolic Architecture) is a practical example of this Cognitive AI approach. It promises to achieve AGI with many orders of magnitude less data and power for training, and with the ability to learn and adapt continuously. No need to continually discard models and build new ones from scratch.

A sane approach to AGI.